If you’re running a WordPress website, you’ve probably come across terms like robots.txt and SEO. Don’t worry if you’re unsure about what they mean or how to use them.

In this beginner-friendly guide, we’ll explore what the robots.txt file is, why it’s important for your website’s SEO, and how you can easily edit it using the RankMath SEO plugin.

We’ll take you through each step in detail, ensuring you understand everything—even if you’ve never used RankMath or heard of robots.txt before!

What is a Robots.txt File?

The robots.txt file is a small text file located in the root directory of your website (for example, https://www.yourwebsite.com/robots.txt). Its primary function is to tell search engine bots (like Google’s Googlebot) which pages or sections of your site they may and may not crawl.

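Think of the robots.txt file as a “gatekeeper” for your website’s content. While you want search engines to crawl and index your important pages, you might also want to keep them from crawling private or irrelevant pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results (usually without a description) if other sites link to it.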

For example, you may not want Google crawling your admin login page or certain duplicate content sections.

Why Should You Care?

Proper configuration of the robots.txt file affects how search engines crawl your site and, in turn, how it performs in search results. It can:

- Help search engines focus on important content, improving your SEO.

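- Keep crawlers away from unnecessary pages. (Robots.txt is publicly readable and only well-behaved bots honor it, so it is not a way to protect sensitive content; use a password or login for that.)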

- Control how much of your website gets crawled, which can help manage your “crawl budget” (the number of pages search engines like Google are willing to crawl in a given period).

Why Use RankMath for Robots.txt?

You might be wondering why you need a plugin like RankMath to edit your robots.txt file when you can manually upload or edit it via FTP. The reason is simple: RankMath makes the process easy and beginner-friendly, and you never have to touch files on your server.

If you’re new to WordPress or unfamiliar with FTP (File Transfer Protocol), RankMath provides an easy-to-use interface to manage your robots.txt file right from your WordPress dashboard, with no coding or technical knowledge required. Plus, RankMath handles much more than robots.txt; it also covers sitemaps, meta tags, and other SEO settings for your whole site.

Step-by-Step Guide to Editing Robots.txt in WordPress with RankMath

Now that we know why robots.txt is important, let’s go through the process of editing it step by step, from installing RankMath to configuring the file.

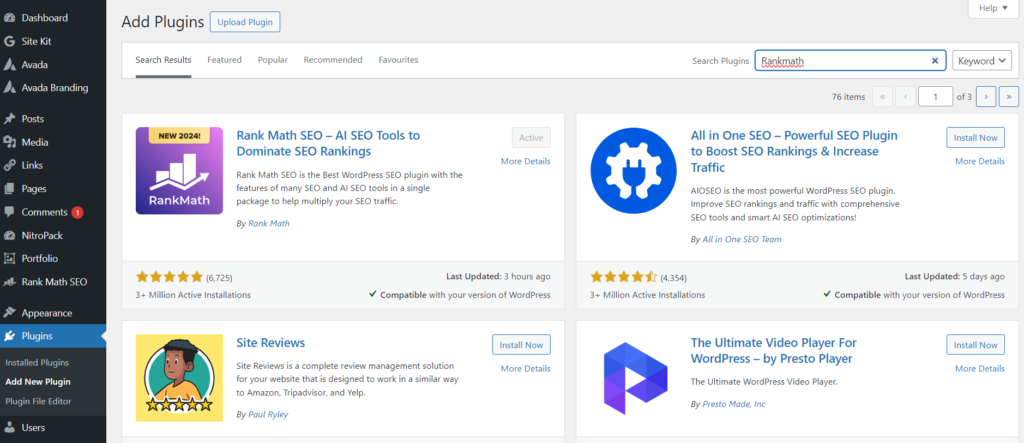

Step 1: Install and Activate RankMath SEO Plugin

If you don’t already have RankMath installed, here’s how you can do it:

Go to your WordPress Dashboard: This is the control center of your website. On the left-hand side, you’ll see a menu with several options.

Click on “Plugins” and then select “Add New.”

In the search bar, type RankMath SEO and press Enter. The RankMath SEO plugin should be one of the first results.

Click “Install Now” next to the RankMath plugin.

After installation, click “Activate” to enable the plugin.

Step 2: Set Up RankMath for SEO

Once RankMath is activated, it will automatically take you through a setup wizard that will configure basic SEO settings for your website. Don’t skip this step! This wizard helps RankMath understand your website’s needs, so it can suggest the best optimization strategies.

- Follow the on-screen instructions for setting up your website’s SEO preferences. You can select options for business type, integrations, and general SEO settings.

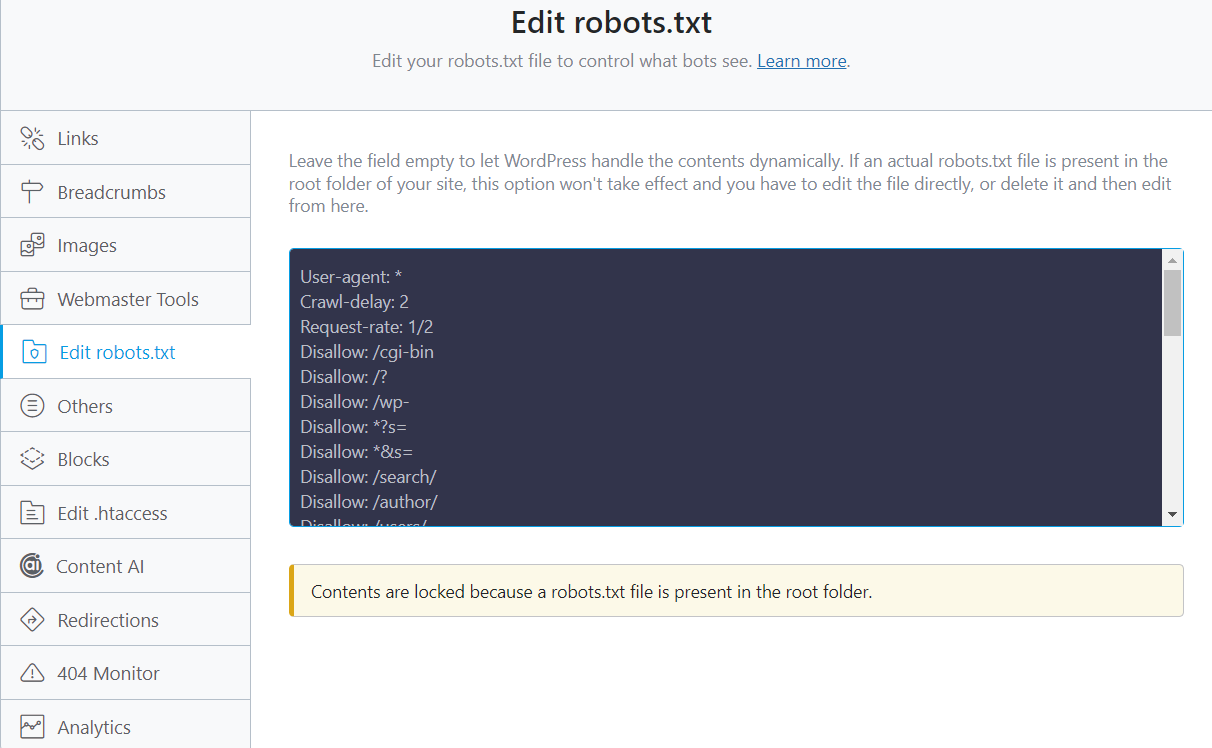

Step 3: Access Robots.txt Editor in RankMath

Now that RankMath is set up, you can edit your robots.txt file easily.

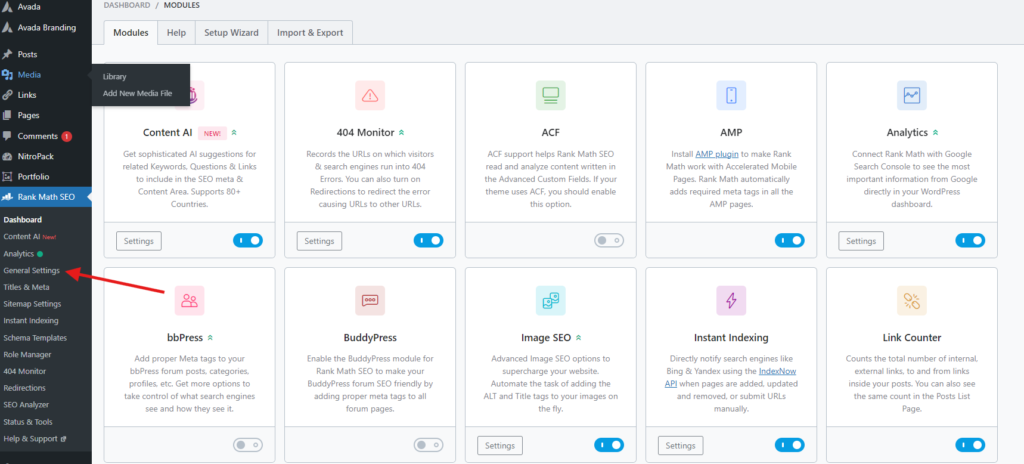

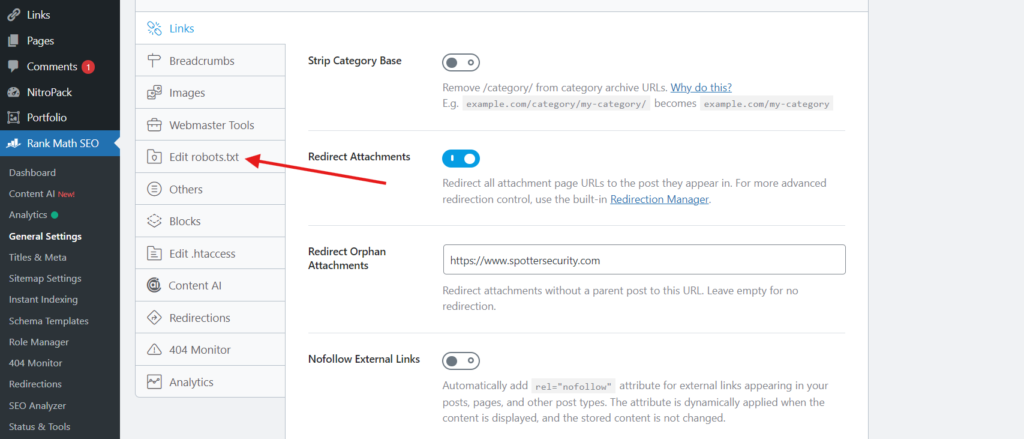

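- Navigate to RankMath Settings: From your WordPress dashboard, locate the RankMath menu on the left-hand side and click “General Settings.”

- Find the Robots.txt Settings: In General Settings, click the “Edit robots.txt” tab to open the editor. If you don’t see it, switch RankMath from Easy Mode to Advanced Mode on the RankMath dashboard. If the editor is greyed out, a physical robots.txt file probably already exists in your site’s root folder, and RankMath can only display it, not edit it.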

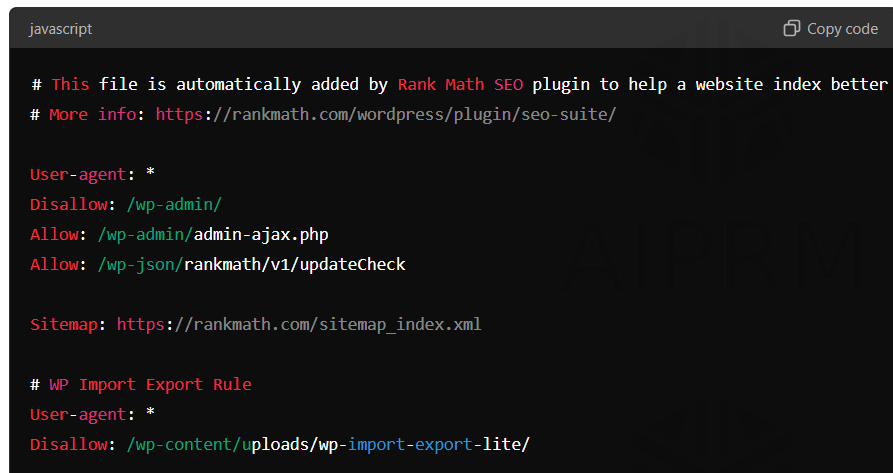

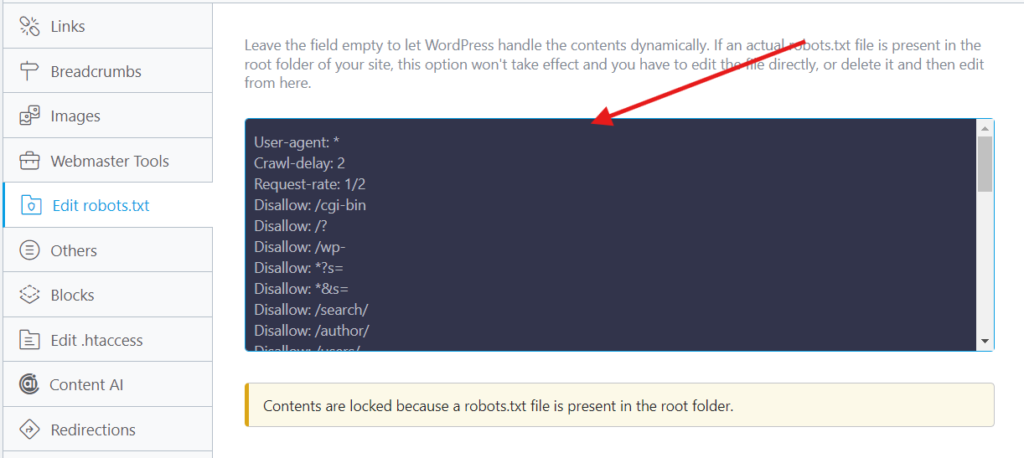

Step 4: Understanding the Default Robots.txt File

When you open the editor, you’ll see a default robots.txt file already created by RankMath. This file might already have a few entries, such as allowing all search engines to crawl your website or disallowing access to specific folders (like your WordPress admin panel).

Here’s an example of a basic robots.txt file:

```
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
```

- User-agent names the crawler (like Googlebot) that the rules below it apply to; * means all crawlers.

- Disallow tells the bots which parts of your site they should not crawl.

- Allow explicitly permits certain parts of your site to be crawled, even if they are within a disallowed directory (like admin-ajax.php).
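When an Allow rule and a Disallow rule both match a URL, Google (following RFC 9309, the robots.txt standard) uses the rule with the longest matching path, and Allow wins a tie, regardless of the order the lines appear in. That is why admin-ajax.php stays crawlable in the default file. Here is a minimal Python sketch of that precedence, assuming plain prefix rules without the `*` and `$` wildcards Google also supports; `is_allowed` is a hypothetical helper for illustration, not part of RankMath or any library.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Hypothetical helper: may `path` be crawled under one group's rules?

    Simplified sketch of RFC 9309 matching: the rule with the longest
    matching path prefix wins, and Allow wins a tie. Wildcards (* and $)
    are NOT handled here.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is crawlable
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty value matches nothing
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


# The rules from the default file above, for the "User-agent: *" group.
default_rules = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]

for path in ["/blog/hello-world/", "/wp-admin/options.php", "/wp-admin/admin-ajax.php"]:
    print(path, "->", "allowed" if is_allowed(path, default_rules) else "blocked")
```

The longer Allow path (/wp-admin/admin-ajax.php) beats the shorter Disallow path (/wp-admin/), so only the second URL is reported as blocked.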

Step 5: Customizing Your Robots.txt File

Now that you know the basics, let’s add or modify the rules in your robots.txt file.

- Block a Specific Page or Directory: If there’s a section of your site you want to keep crawlers out of, add this to your robots.txt file:

```
User-agent: *
Disallow: /private-directory/
```

Replace /private-directory/ with the path (relative to your site’s root, without the domain) of the folder or page you want to block.
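Robots.txt rules are prefix matches on the URL path, and paths are case-sensitive, so a trailing slash or a capital letter changes what gets blocked. The small Python sketch below illustrates this with a hypothetical `blocked_by` helper; it deliberately ignores wildcards and Allow rules to keep the focus on prefix matching.

```python
def blocked_by(path: str, disallow_prefix: str) -> bool:
    """Hypothetical helper: does a single Disallow value block this path?"""
    # Rules are prefixes of the URL path (no domain), matched case-sensitively.
    # An empty "Disallow:" value blocks nothing.
    return disallow_prefix != "" and path.startswith(disallow_prefix)


rule = "/private-directory/"
for path in [
    "/private-directory/",
    "/private-directory/report.html",
    "/private-directory",              # no trailing slash: not matched
    "/Private-Directory/report.html",  # different case: not matched
]:
    print(f"{path!r}: {'blocked' if blocked_by(path, rule) else 'crawlable'}")

# A shorter prefix catches more URLs: "/private" also matches this path.
print(blocked_by("/private-notes.html", "/private"))  # True
```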

- Give a Specific Crawler Its Own Rules: If you want one search engine’s bot, such as Googlebot, to crawl one page but stay out of another, add a group for that bot:

```
User-agent: Googlebot
Allow: /public-page/
Disallow: /private-page/
```
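One detail that is easy to miss: a crawler follows only the group that names it and ignores the rest. Once you add a `User-agent: Googlebot` group, Googlebot no longer reads the `User-agent: *` rules, so anything you still want blocked for Googlebot has to be repeated in its own group. The Python sketch below models this under simplifying assumptions (exact, case-insensitive name matching only; no fallback such as Googlebot-Image using the Googlebot group); `rules_for` is a hypothetical helper.

```python
def rules_for(agent: str, robots_txt: str) -> list[tuple[str, str]]:
    """Hypothetical helper: the rules a crawler named `agent` would follow.

    Simplified sketch: a crawler obeys the group whose User-agent line names
    it and falls back to the "*" group only when no group names it.
    Consecutive User-agent lines share one group.
    """
    groups: dict[str, list[tuple[str, str]]] = {}
    current_agents: list[str] = []
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue  # skip blank lines and anything that isn't "key: value"
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if not last_was_agent:
                current_agents = []  # a new group starts
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            last_was_agent = True
        elif key in ("allow", "disallow"):
            for name in current_agents:
                groups[name].append((key.capitalize(), value))
            last_was_agent = False
    return groups.get(agent.lower(), groups.get("*", []))


robots_txt = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

User-agent: Googlebot
Allow: /public-page/
Disallow: /private-page/
"""

# Googlebot gets only its own group, so /wp-admin/ is not disallowed for it.
print(rules_for("Googlebot", robots_txt))
# [('Allow', '/public-page/'), ('Disallow', '/private-page/')]
print(rules_for("Bingbot", robots_txt))
# [('Disallow', '/wp-admin/'), ('Allow', '/wp-admin/admin-ajax.php')]
```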

- Keep Images out of Search Results: If your site has images that you don’t want to appear in search results, you can block crawlers from your uploads folder:

```
User-agent: *
Disallow: /wp-content/uploads/
```

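This stops compliant crawlers from fetching anything in your uploads folder. That covers not only images but also PDFs and other media, so only use it if none of those files need to appear in search.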

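- Point to Your Sitemap: It’s a good idea to help search engines find your sitemap. Add a line like this to your robots.txt file (the bottom is conventional, but it can go anywhere), replacing the URL with your sitemap’s full address, which you can find in RankMath’s Sitemap Settings: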

```
Sitemap: https://www.yourwebsite.com/sitemap.xml
```
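The Sitemap line isn’t tied to any User-agent group, and you can list more than one. If you’d like to confirm the line is readable, Python’s standard-library `urllib.robotparser` (Python 3.8 or later) exposes sitemap URLs through `site_maps()`. A minimal sketch, using this guide’s placeholder domain rather than a real site:

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt content; paste your own rules here instead.
robots_txt = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php

Sitemap: https://www.yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())  # parse text directly, no network request
print(parser.site_maps())  # ['https://www.yourwebsite.com/sitemap.xml']
```

`site_maps()` returns `None` when the file has no Sitemap lines, which is a quick way to spot a missing or misspelled directive.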

Step 6: Save and Test Your Changes

Once you’ve made the necessary changes to your robots.txt file, click the Save Changes button at the bottom of the RankMath editor.

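But don’t stop there! It’s crucial to test your robots.txt file to make sure you haven’t accidentally blocked important sections of your website. In Google Search Console, the robots.txt report (which replaced the older robots.txt Tester) shows the file Google last fetched and flags any errors, and the URL Inspection tool tells you whether a specific page is blocked by robots.txt.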
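Alongside Search Console, you can sanity-check new rules before pasting them into the RankMath editor. The following Python sketch flags any must-crawl paths that the `User-agent: *` group would block. It is a simplified model, assuming prefix-only rules (no `*` or `$` wildcards), longest-match precedence with Allow winning ties as in RFC 9309, and only the `*` group; the `blocked_paths` function and the `/shop/` and `/services/` paths are hypothetical examples.

```python
def blocked_paths(robots_txt: str, must_crawl: list[str]) -> list[str]:
    """Hypothetical helper: which `must_crawl` paths does the "*" group block?

    Simplified sketch: reads only the "*" group, treats rule values as plain
    prefixes (no * or $ wildcards), and applies longest-match-wins with Allow
    winning ties.
    """
    rules: list[tuple[bool, str]] = []  # (is_allow, prefix)
    in_star_group = False
    last_was_agent = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            # Consecutive User-agent lines share one group.
            in_star_group = (in_star_group and last_was_agent) or value == "*"
            last_was_agent = True
        else:
            last_was_agent = False
            if key in ("allow", "disallow") and in_star_group and value:
                rules.append((key == "allow", value))

    blocked = []
    for path in must_crawl:
        matches = [(len(prefix), is_allow) for is_allow, prefix in rules
                   if path.startswith(prefix)]
        # max() picks the longest prefix; on equal length True (Allow) beats False.
        if matches and not max(matches)[1]:
            blocked.append(path)
    return blocked


new_rules = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Disallow: /shop/
"""
important = ["/", "/shop/blue-widget/", "/services/", "/wp-admin/admin-ajax.php"]
print(blocked_paths(new_rules, important))  # ['/shop/blue-widget/']
```

An empty list means none of the pages you listed would be blocked by the `*` group; anything printed is worth a second look before you click Save Changes.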

Best Practices for Optimizing Your Robots.txt File

As you customize your robots.txt file, keep these best practices in mind:

Don’t Block Important Pages: Be careful not to disallow URLs that are essential for your SEO efforts, such as product or service pages.

Keep It Simple: Avoid overly complicated rules. A short, clean robots.txt file is easier to review and less likely to block something by accident.

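Use Robots.txt and Meta Tags for Different Jobs: Robots.txt controls crawling, while a noindex meta tag controls indexing. Don’t block a page in robots.txt if you’re relying on its noindex tag to keep it out of search results, because crawlers that can’t fetch the page never see the tag.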

Update as Needed: Your robots.txt file should evolve as your website changes. Regularly review and update it to ensure it reflects your current site structure.

Use RankMath to Edit Robots.txt Like a Pro

Congratulations! You’ve now learned what the robots.txt file is, why it’s important for your SEO, and how to edit it using the RankMath SEO plugin. By following the steps in this guide, even beginners can confidently manage their website’s visibility in search engines.

By optimizing your robots.txt file, you’re taking another step toward improving your site’s SEO performance, helping search engines focus on the right content and keeping crawlers away from private or irrelevant pages.

Still not using RankMath? Download RankMath for Free and start optimizing your WordPress site today!